Machine Learning

Machine learning is playing an ever-increasing role in areas of electrical and computer engineering, including signal and image processing, robotics, communications, and many more. This Focus Path will teach students the foundations of machine learning methods and how to apply them in a variety of modern engineering systems.

The typical progression of courses in this focus path is:

- 1st or 2nd year suggested starting point: ECE 2410 Introduction to Machine Learning. This course introduces the basics of machine learning and lets you start experimenting with ML systems without requiring any math background.

- 2nd year core ECE courses: ECE 2700 Signals and Systems forms the foundation of many ML concepts.

- 2nd or 3rd year APMA courses: APMA 3080 Linear Algebra and APMA 3100 Probability are the math background on which all the upper-level ML courses will build.

- 3rd year ML course: ECE 3502 Foundations of Data Analysis provides the foundations for modern data analysis and machine learning and introduces students to recent topics in machine learning such as deep neural networks.

- 4th year elective courses: There are many exciting courses that we offer once you have the basics down! Check out some of the previous course websites below.

Gateway Courses

These courses are a good entry point into the machine learning focus path.

Learn about and experiment with machine learning algorithms using Python. Applications include image classification, removing noise from images, and linear regression. Students will collect and interpret data, learn machine learning theory, and build the systems-level thinking skills needed to break a problem down into functions and then implement, test, and document those functions.

Prerequisite: CS 111X

This course is an introduction to the foundations behind modern data analysis and machine learning. The first part of the course covers selected topics from probability theory and linear algebra that are key components of modern data analysis. Next, we cover multivariate statistical techniques for dimensionality reduction, regression, and classification. Finally, we survey recent topics in machine learning, in particular, deep neural networks.

Prerequisites: You should be comfortable programming in Python (CS 2110 or equivalent is sufficient)

Elective Courses

This course explores the intricacies of AI hardware, covering the current landscape and the developments needed to keep pace with AI's rapid growth and widespread integration across all computing tiers. Through this exploration, you will gain an understanding of both the existing technologies and the future challenges in AI hardware design and implementation.

Unlike many of the other courses in this focus path, this course focuses more on hardware than on the algorithms or mathematical principles behind ML.

Prerequisites: ECE 2330 DLD or CS 2130 CSO1.

Suggested prerequisites or corequisites: Embedded, ECE 3780 Foundations of Data Analysis, ECE 4435 Computer Architecture, and/or ECE 4332 VLSI

The objective of this course is to provide the basic concepts and algorithms required to develop mobile robots that act autonomously in complex environments. The main emphasis is on mobile robot locomotion and kinematics, control, sensing, localization, mapping, path and motion planning.

This course covers:

- Robot poses and transformations

- Kinematics

- PID controls and motion planning techniques

- Estimation, localization, and mapping

  - Gaussian-based methods

  - Kalman filter

  - Particle filter

  - SLAM (simultaneous localization and mapping)

- Quadrotor dynamics

- Swarming

- Planning under uncertainty

For some of the cool projects discussed in the course, see Nicola Bezzo's website.

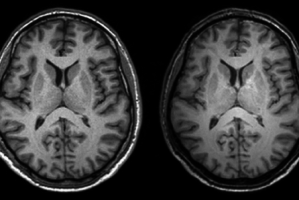

Description: This course is an in-depth study of advanced topics in image data analysis. Students will learn practical image techniques and gain the mathematical fundamentals in machine learning needed to build their own models for effective problem solving. Topics include image denoising/reconstruction, deformable image registration, numerical analysis, probabilistic modeling, data dimensionality reduction, and convolutional neural networks for image segmentation/classification. The main focus might change from semester to semester. The graduate students (ECE/CS 6501) will be given additional programming tasks and more advanced theoretical questions.

Prerequisite: CS 2130 and APMA 3080 Linear Algebra.

Mathematical background in linear algebra, multivariate calculus, probability and statistics, and programming skills are required in this class.

Description: This introductory course offers a broad overview of the main techniques in machine learning. Students will study the basic concepts of advanced machine learning methods as well as their theoretical background. Topics include learning theory (bias/variance trade-offs; VC theory); supervised learning (parametric/nonparametric methods, Bayesian models, support vector machines, neural networks); unsupervised learning (dimensionality reduction, kernel tricks, clustering); and reinforcement learning. The graduate students (ECE 6502 / CS 6316) will be given additional programming tasks and more advanced theoretical questions.

Prerequisites: Mathematical background in linear algebra, multivariate calculus, probability and statistics, and programming skills are required in this class.

Course objective: Graphs/networks are often used to represent a plethora of real-world phenomena: social relations among online users, hyperlinks among webpages, biological interactions among genes, brain activities among neurons, to name a few. How can we understand, characterize, and extract actionable knowledge from the deluge of graph data, to benefit high-impact applications from different disciplines? This course will introduce the fundamental problems and cover the recent research advances in analyzing and mining large-scale graphs. It will also discuss the practical applications and the broad impacts of graph mining algorithms in diverse settings (e.g., social media, e-commerce, education, and security). The following topics will be covered in the course: graph essentials, network measures, network models, data mining essentials, community analysis, information diffusion, recommendation, network representation learning, and graph neural networks.

Prerequisites: There are no official prerequisites for this course, but students are expected to: (1) have basic knowledge of data mining and machine learning; (2) be familiar with linear algebra, discrete mathematics, and statistics; (3) be comfortable reading research papers and giving presentations; (4) have good programming skills in a language such as Python, C/C++, Java, Matlab, or R.

The course provides an in-depth understanding of matrix analysis concepts, algorithms, and applications, including eigenvalues and eigenvectors, linear transformations, similarity transformations, commonly used factorizations, canonical forms, and Hermitian and symmetric matrices. In particular, we will illustrate these concepts with specific applications in machine learning, control, signal processing, and optimization.

Welcome! In this course, we’ll study estimation and machine learning from a probabilistic point of view.

Why a probabilistic view? Information and uncertainty, which underlie both fields, can be represented via probability in a robust and versatile way. Unknown quantities can be cast as random variables and their relationships to available information as joint distributions. This provides a unifying framework for setting up estimation and machine learning problems, stating our assumptions clearly, designing methods, and evaluating performance.

What topics will we study? We will start with estimation, which can be defined as the problem of learning about the world from data (e.g., finding the chance of getting a disease given one’s genetic make-up) or drawing conclusions about relationships (e.g., what are the best predictors of academic success?). We will then learn about machine learning problems such as regression and classification, where the goal is to predict an unknown quantity, e.g., the price of a house, based on some relevant information. We will also learn how to deal with situations when part of the data is missing. Finally, we will discuss computational methods, which help tackle difficult problems via approximation.

Prerequisites:

- Fluency in basic probability (e.g., APMA 3100) is needed for the course. You should be comfortable with 70-80% of Chapter 0. You can also refer to these pages I developed for a different course to review probability, although these don’t cover everything we’ll need.

- Familiarity with linear algebra.

- The programming exercises are based on Python.

Note to Undergraduate students: You do not need instructor permission to enroll in this course. But fluency in probability is an important prereq and if your foundation in probability is not strong, you will not be able to fully benefit from the course.

An introduction to digital signal processing. Topics include discrete-time signals and systems, application of z-transforms, the discrete-time Fourier transform, sampling, digital filter design, the discrete Fourier transform, the fast Fourier transform, quantization effects and nonlinear filters.

Prerequisite: ECE 2700 (Signals and Systems).

The prerequisite is in the process of being updated to be CS 2130. You may take this course without ECE 2700.

Course objective: Over the course of the last twenty years, convex optimization has firmly established itself as an indispensable tool for solving a plethora of design and analysis problems arising in data mining, machine learning, signal processing, wireless communications, control systems, etc. This course will primarily serve to introduce students to the key concepts in convex optimization theory with the goal of enabling them to formulate and solve various convex optimization problems arising in engineering, data science, and machine learning. Non-convex optimization techniques in deep learning will also be introduced.

Prerequisites: Solid background in linear algebra (vector spaces, the four fundamental subspaces of a matrix, positive-definiteness, eigenvalue and singular value decompositions, linear least-squares), basic knowledge of probability and multivariate calculus, and familiarity with coding in a high-level language (e.g., Matlab, Python).

What our students say

ECE 3502: Foundations of Data Analysis (FoDA)

"I really enjoyed taking Foundations of Data Analysis! It was an amazing introductory course into commonly used algorithms when doing classification tasks. The architecture and high-level implementation of neural networks were also covered near the end of the semester. I hadn’t taken Intro to Machine Learning before this class, yet the information was still very easy to digest without having an ML background. Python is pretty heavily used in this class throughout the assignments, so as long as you have strong skills in Python, I would highly recommend taking this class! I continued to take Machine Learning in Image Analysis the following semester, and would also highly recommend this as well. The class focuses more on image classification tasks, such as ways to perform image segmentation and image denoising. I found that taking these classes back-to-back was really beneficial since this class expanded on a lot of concepts that were taught in FoDA, so there was a nice sense of continuity. We would also frequently review published papers as a part of this class, and it was really interesting to see what work was currently being researched in this domain. Even if you don’t intend to pursue the route of ML in the future, I still highly recommend these two classes if it at all piques your interest! These were definitely two of my favorite electives that I have taken at UVA, and I’ve learned so much from them as well." - Student from Foundations of Data Analysis

ECE 3502: Foundations of Data Analysis (FoDA)

"Foundation of Data Analysis gave a great foundation for learning ML processes" - Student from Foundations of Data Analysis

ECE 4750: Digital Signal Processing

"The professor is great, the course load is not overly demanding, and it is all around a very enjoyable class." - Spencer Hernandez, Class of 2024

Machine Learning FAQ

- To do ML, you need to understand whatever system you are working with. ML in ECE will teach you how to apply ML to areas like chip design, robotics, and wireless communications. We will bridge ML with engineering applications by emphasizing physics-informed and model-based methods.

- The ECE version of ML will teach the mathematical background behind ML more so than a data science major might, while the data science version is likely to cover feature engineering more than we will.

Math elective:

- Linear algebra is required for the Machine Learning for Engineers course, so that should be your math elective.

- If you have space for additional math electives, an advanced probability course would be a great fit.

ECE electives:

- Other than those listed above for the focus path, electives in the signal processing and control systems areas are great for those interested in the roots of the ML field.

- You should take any other ECE electives that interest you and, as you take each course, think about how you might be able to apply ML in that field!

Every EE degree requires a 1.5 credit lab, so you will need to take one to complete the major. However, there is no specific lab required for this focus path so you are welcome to select from any of the labs offered in ECE.

Some faculty in this area:

You are likely to see these faculty as the instructors for elective courses. Click on a name to visit a website and read about the cool research being done in this area at UVA!

Scott T. Acton

Professor Acton’s laboratory at UVA is called VIVA - Virginia Image and Video Analysis. They specialize in biological image analysis problems. The research emphases of VIVA include machine learning for image and video analysis, AI for education, tracking, segmentation, and enhancement.

Caroline Crockett

Professor Crockett received a B.S. degree in electrical engineering from the University of Virginia in 2015 and a Ph.D. degree in electrical engineering from the University of Michigan in 2022. Before entering graduate school, she worked in government contracting as a systems and image quality engineer. She is a member of IEEE and ASEE.

Farzad Farnoud

Tom Fletcher

Mathews Jacob is an expert in machine learning algorithms for biomedical imaging. He develops advanced magnetic resonance imaging (MRI) methods for brain, lung, and heart applications.

Jundong Li

Li's research interests are generally in AI, Data Mining, and Machine Learning. As a result of his research work, he has published over 150 papers in high-impact venues. He has won several prestigious awards, and his work is supported by NSF, DOE, ONR, JP Morgan, Netflix, Cisco, and Snap.

Nikolaos Sidiropoulos

Nikos Sidiropoulos earned his Ph.D. in Electrical Engineering from the University of Maryland–College Park, in 1992.

Cong Shen is an Associate Professor in the Department of Electrical and Computer Engineering at UVA. He obtained his Ph.D. degree from UCLA. His research interests are in wireless communications, networking, in-context learning, reinforcement learning, and federated learning.

Miaomiao Zhang

Professor Zhang completed her PhD in computer science at the University of Utah. She was a postdoctoral associate in the Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology.