As Alzheimer’s and other dementia patients lose their memory, they sometimes lash out in their anger and confusion. That creates a real problem for the millions of stressed-out families in the United States struggling to provide them care.

But now there’s the first sign that AI can help caregivers cope.

The University of Virginia School of Engineering and Applied Science has successfully led 11 home deployments of a new voice monitoring system that can alert family members providing Alzheimer’s care when they themselves are experiencing anger and conflict, then offer them ways to adjust.

UVA worked in conjunction with The Ohio State University and the University of Tennessee on the machine learning tool with an ear for mood.

“While this first sample size was small, the caregivers do claim the machine learning system helped relax them,” said real-time and embedded systems pioneer John Stankovic, the BP America Professor Emeritus at UVA who was a principal investigator on the project.

Stankovic’s pitch to the National Science Foundation, which partnered with the National Institutes of Health to jointly fund the $735,000 research project, was this: calmer and more in-control caregivers have happier interactions, which in turn lead to greater quality of life for patients and lower medical costs.

Stankovic provided a project completion update for the NSF and journalists earlier this month.

How It Works: Listening for Mood

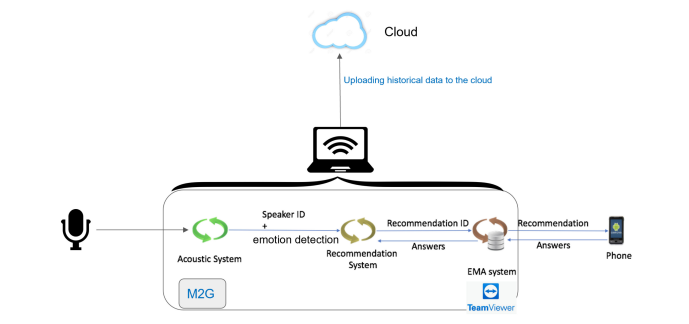

Stankovic’s team of Ph.D. students implemented their Patient-Caregiver Recommendation, or PCR, research in homes across the country over a period of four months. The listening “ear” was an external room microphone paired with a machine learning model designed to infer the underlying nature of verbal interactions in the homes.

The system, which must be trained on the sound of patient and caregiver voices, uses pitch, volume, tone and frequency to recognize both person and mood.
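
As a rough illustration of what acoustic features such as volume and pitch look like in code, the sketch below computes both for one short audio frame. It is not the team's model: the function names, the 16 kHz sample rate, the 75 to 400 Hz search range, and the simple autocorrelation pitch estimator are all assumptions chosen for clarity.

```python
import math

def rms_volume(frame):
    """Root-mean-square amplitude of a frame (a simple proxy for loudness)."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def estimate_pitch(frame, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency in Hz by autocorrelation.

    Searches the lags that correspond to fmin..fmax and returns the
    frequency whose lag gives the strongest self-similarity.
    """
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

# Hypothetical test signal: a 200 Hz tone sampled at 16 kHz for 50 ms.
SAMPLE_RATE = 16000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(800)]
features = {
    "volume": rms_volume(tone),
    "pitch_hz": estimate_pitch(tone, SAMPLE_RATE),
}
```

A trained model would take features like these, alongside many others, as input for recognizing the speaker and the mood.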

Unwanted noise is no obstacle. The system works even when the television is on or when new people enter.

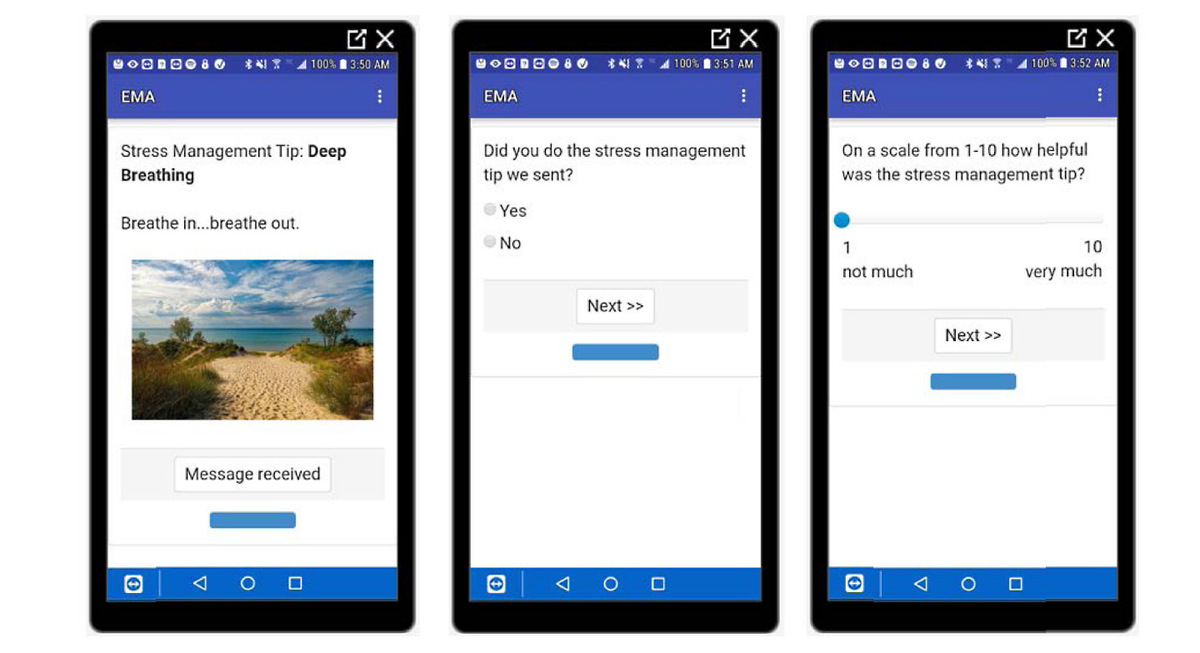

Far from the audio equivalent of a nanny cam, the system doesn’t create a record of the content of anyone’s speech. Instead, all the data is immediately coded for emotional states. If anger registers in the caregiver’s voice, the system immediately sends that person recommendations.
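
The privacy idea described above, keeping only a coded emotional state and never the speech itself, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the PCR implementation: `classify` stands in for the trained model, and `EmotionEvent`, `process_frame`, `fake_classify`, the label strings, and the prompt wording are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

@dataclass(frozen=True)
class EmotionEvent:
    """The only thing retained: who spoke, the coded mood, and when."""
    speaker: str
    emotion: str
    timestamp: float

def process_frame(
    frame: Sequence[float],
    classify: Callable[[Sequence[float]], Tuple[str, str]],
) -> Tuple[EmotionEvent, Optional[str]]:
    """Code one audio frame as an emotion event and decide whether to prompt.

    The raw frame is used only inside this call; it is never stored or returned.
    """
    speaker, emotion = classify(frame)  # assumed model: frame -> (speaker, emotion)
    event = EmotionEvent(speaker, emotion, time.time())
    prompt = None
    if speaker == "caregiver" and emotion == "anger":
        prompt = "You sound stressed. Maybe it's time to go for a walk?"
    return event, prompt

# Stand-in classifier for demonstration only; a real system would use a trained model.
def fake_classify(frame):
    loud = max(abs(s) for s in frame) > 0.8
    return ("caregiver", "anger" if loud else "neutral")

event, prompt = process_frame([0.1, 0.95, -0.9], fake_classify)
calm_event, calm_prompt = process_frame([0.1, 0.05, -0.1], fake_classify)
```

In this sketch a recommendation is produced only for the first frame, and neither event carries any audio samples.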

Stankovic’s co-principal investigator, Hongning Wang, and Ph.D. students developed the “context aware” system. The advice, delivered via smartphone, adapts over time based on what works for the caregiver. The system decides what to send as recommendations using a federated learning framework – “an approach that allows learning privately across different home deployments,” Wang said.
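
Wang's description matches the general idea of federated learning, in which each home trains on its own data and shares only model parameters, not audio. The sketch below shows federated averaging (FedAvg), one common form of that idea. The source does not say which algorithm the team used, and `local_update`, `federated_average`, the toy linear model, and the data are all illustrative assumptions.

```python
from typing import List, Tuple

Weights = List[float]
Example = Tuple[List[float], float]  # (features, target)

def local_update(weights: Weights, data: List[Example], lr: float = 0.1) -> Weights:
    """One pass of gradient descent on a home's private data (linear model, squared error)."""
    w = list(weights)
    for x, y in data:
        error = sum(wi * xi for wi, xi in zip(w, x)) - y
        w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w

def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average the homes' weights, weighting each home by its number of examples."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]

# Hypothetical private datasets for two homes; only weights leave each home.
home_a = [([1.0, 0.0], 2.0), ([0.0, 1.0], 1.0)]
home_b = [([1.0, 1.0], 3.0)]

global_weights = [0.0, 0.0]
for _ in range(200):  # communication rounds
    updates = [(local_update(global_weights, d), len(d)) for d in (home_a, home_b)]
    global_weights = federated_average(updates)
```

In this toy setup the shared weights move toward values that fit both homes' data, even though neither home's examples are ever sent to the other or to the server.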

Maybe it’s time to go for a walk? Or do some yoga? Even just realizing in the moment that they are overwhelmed can help a caregiver, Stankovic said.

He added that, of all the automated tools, “Encouraging words in the morning were the most valuable.”

Partners in Progress

The School of Engineering partnered with The Ohio State University on the geriatrics aspects of the research and with the University of Tennessee on the psychology aspects. The research has included follow-on student training in use of the tool.

The system dovetails with two of UVA’s Grand Challenges: to make the world a better place through digital technology and through precision health.

Ironically, the recent pandemic helped make it possible to offer the technology to a growing set of care providers. Stankovic noted that the team conducted the research during COVID-19’s restrictions on in-person meetings.

“To handle this issue, we created techniques to be able to box up our system and mail it,” he said. “Caregivers with no technical expertise were able to deploy it and start using it; it took them about an hour.

“This means we can now recruit nationwide. You don’t have to be within driving distance of anyone who is going to deploy your system.”

Stankovic said next steps are to be determined, given his recent retirement. Associated researchers such as Ashley Gao, a UVA computer science Ph.D. graduate who is now an assistant professor at William & Mary, may pick up the baton, or other interested parties may. The team published six papers on the system.

“The key technical AI innovations are in the papers, and someone could build on those,” he said.

Stankovic and Wang, currently a visiting professor at UVA, said they would be glad to be contacted via email by those interested in furthering the technology.