A graduate student in Professor Nicola Bezzo’s Autonomous Mobile Robots Lab at the University of Virginia School of Engineering and Applied Science was recently part of a team that devised what it believes is a new way for teams of coordinated robots to respond when the unexpected happens.

Lauren Bramblett, a U.S. Air Force officer who earned her Ph.D. in systems and information engineering this summer, was the first author on the paper detailing the strategy, which relies on what robots know and believe about one another’s likely next steps and their ability to carry them out.

Bramblett presented the paper at the prestigious International Conference on Intelligent Robots and Systems, held Oct. 14-18 at the ADNEC Centre in Abu Dhabi, UAE. The paper is slated to appear in the conference proceedings, published online by the Institute of Electrical and Electronics Engineers.

“This research offers a way to modify multi-robot systems so they can complete tasks efficiently and adapt on the go — even when communication between robots is limited or interrupted,” Bramblett said.

A More Effective Algorithmic Approach

Humans deploy robot groups for all sorts of reasons. Schools of robot fish approximate the activity of real fish so that scientists can better understand aquatic systems. Teams of roving robots sow seeds in the ground on large farms. Drone swarms scout for missing people or drop emergency aid following natural disasters.

But like any other system, multi-agent robot systems can fail as a group or falter individually. One cause might be a loss of communication with a command system. Another might be obstacles that differ from the hypothetical scenarios used in planning.

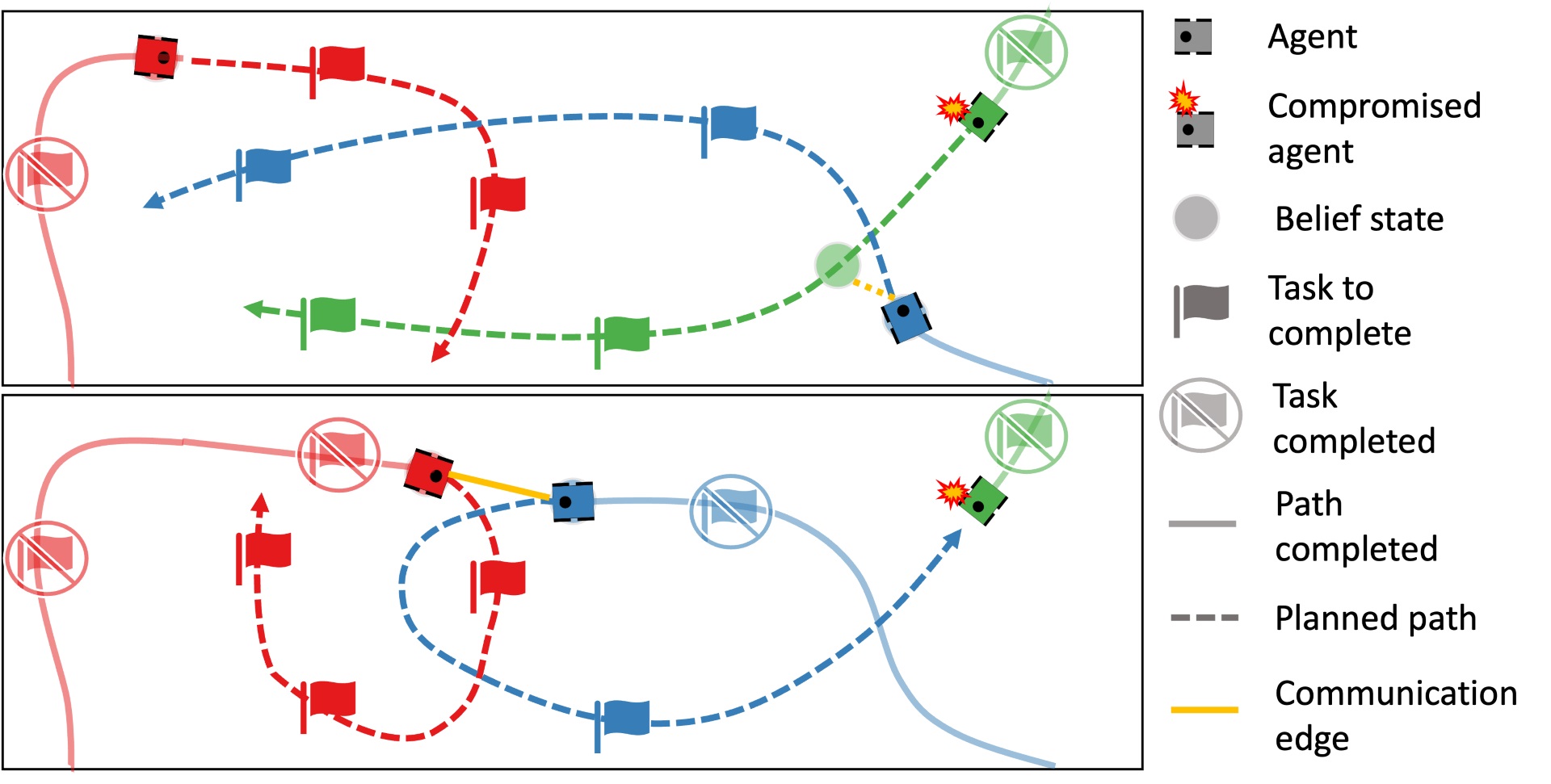

The research, conducted in collaboration with researchers at Mälardalen University in Sweden, starts with the idea that each robot keeps a set of ongoing “beliefs” about the other robots in the swarm. Each robot not only assesses its co-workers’ conditions, including their ability to carry out tasks at any given moment, but also predicts their ever-changing goals in the observed environment, which the researchers call “empathy” states.

This decentralized, “epistemic” reasoning (reasoning about what teammates know and believe) allows robots to adapt to tasks in real time, based on their individual sensor data and machine learning programming and on the exchanges that occur between them whenever they can communicate. That includes reallocating tasks as needed.
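The article does not spell out how the beliefs are represented or how tasks are reallocated, so the following is only a minimal illustrative sketch under stated assumptions, not the authors’ method. It assumes each robot stores, for every teammate (and itself), a predicted position, the tasks that teammate is believed to be pursuing, and a believed probability that it is still operational. Tasks held by teammates believed to have failed are handed greedily to the nearest teammate believed to be working. The names `Belief`, `reallocate`, `p_operational` and `threshold` are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Belief:
    """Hypothetical: one robot's belief about a single teammate (or itself)."""
    position: tuple[float, float]                     # last known or predicted position
    tasks: list[str] = field(default_factory=list)    # tasks the teammate is believed to pursue
    p_operational: float = 1.0                        # believed probability it can still act


def reallocate(beliefs, task_locations, threshold=0.5):
    """Greedy reallocation sketch (an assumption, not the paper's planner).

    beliefs: robot id -> Belief, as held by one robot.
    task_locations: task id -> (x, y).
    Returns robot id -> task list for teammates believed operational.
    """
    active = {rid: b for rid, b in beliefs.items() if b.p_operational >= threshold}
    if not active:
        return {}  # no teammate believed able to act; nothing to reassign to
    plan = {rid: list(b.tasks) for rid, b in active.items()}
    # Tasks held by teammates this robot believes have dropped out.
    orphaned = [t for rid, b in beliefs.items() if rid not in active for t in b.tasks]
    for task in orphaned:
        target = task_locations[task]
        nearest = min(active, key=lambda rid: math.dist(active[rid].position, target))
        plan[nearest].append(task)
    return plan


beliefs = {
    "A": Belief((0.0, 0.0), ["t1"]),
    "B": Belief((10.0, 0.0), ["t2"]),
    "C": Belief((5.0, 5.0), ["t3"], p_operational=0.2),  # believed disconnected
}
task_locations = {"t1": (1.0, 0.0), "t2": (9.0, 0.0), "t3": (8.0, 1.0)}
plan = reallocate(beliefs, task_locations)
print(plan)  # {'A': ['t1'], 'B': ['t2', 't3']}
```

Here robot C is believed to be disconnected, so its task `t3` goes to B, the operational teammate closest to that task. A real planner would weigh far more than distance; the greedy rule simply keeps the idea visible.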

A mathematical algorithm “rewards” robots for their intermittent communications, registering a positive, negative or zero change that indicates whether each robot is individually improving its performance. Through these intermittent exchanges, robots can check whether everything is working as expected and, if not, decide either to inform others or to search for the missing teammate.
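To make the positive, negative or zero reward and the inform-or-search choice concrete, here is a toy sketch. It is an assumption throughout: the article does not give the paper’s reward function or decision rule, and the helpers `reward_sign` and `decide`, the performance scores and the boolean inputs are all hypothetical.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    INFORM = "inform"
    SEARCH = "search"


def reward_sign(score_before: float, score_after: float, tol: float = 1e-9) -> int:
    """Hypothetical: +1, -1 or 0 depending on whether a performance score
    improved, worsened or stayed the same after a communication event."""
    delta = score_after - score_before
    if delta > tol:
        return 1
    if delta < -tol:
        return -1
    return 0


def decide(expected_contact: bool, heard_from_teammate: bool,
           other_teammate_in_range: bool) -> Action:
    """Toy rule, not the paper's: if a teammate was expected to check in and
    did not, tell a reachable teammate if possible, otherwise go look for it."""
    if not expected_contact or heard_from_teammate:
        return Action.CONTINUE  # observations match this robot's beliefs
    if other_teammate_in_range:
        return Action.INFORM
    return Action.SEARCH


print(reward_sign(0.4, 0.6))       # 1
print(decide(True, False, False))  # Action.SEARCH
```

The tolerance `tol` keeps floating-point noise from being read as a real gain or loss, so an unchanged score reliably maps to zero.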

“This is the first study, as far as we know, to combine theory of mind-based decision-making with real-time task adjustments when communication between robots is spotty,” Bezzo said.

‘On the Fly’ Sims and Crazyflies

In computer simulations that gamed out the movements of multiple robots working together, the algorithm completed tasks on the fly more effectively than traditional task allocation and planning methods.

Its advantage over the comparison methods grew as more robot co-workers were “disconnected” and unable to help complete the tasks.

The algorithm also proved itself in practice when deployed on real Crazyflie drones, which completed their tasks despite encountering unforeseen failures during their runs.

“Robust Online Epistemic Replanning of Multi-Robot Missions,” available in draft form on arXiv, was authored by Bramblett, Branko Miloradovic, Patrick Sherman, Alessandro V. Papadopoulos and Bezzo.